Project

Neural Network Quantization Experiments

keywords: SqueezeNet, ResNet18/34/50, model quantization, post-training quantization, PyTorch

date: Sep.2021

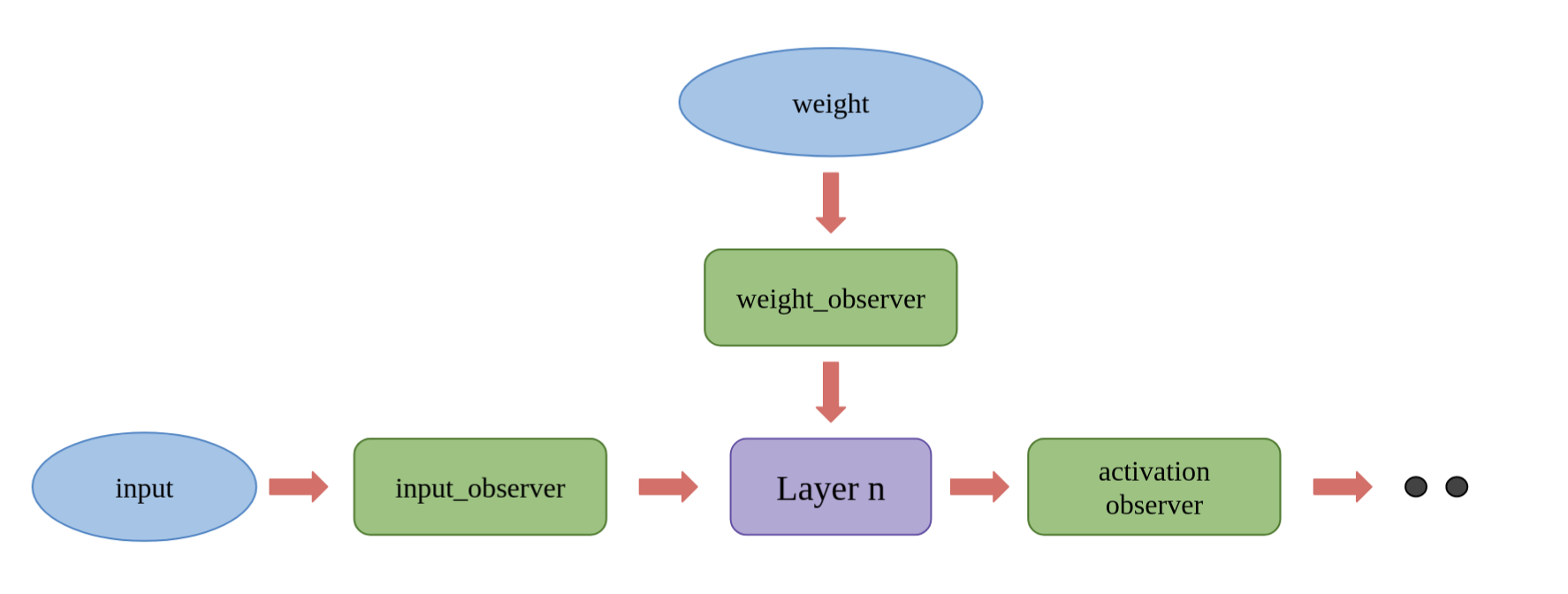

In the deep learning field, storing parameters in the 32-bit floating-point format, also known as FP32, is the mainstream approach. Although FP32 gives higher accuracy, it is computationally expensive. Model quantization significantly reduces the memory bandwidth and storage a neural network needs, which makes it possible to run the model on devices without a GPU. Research has shown that weights and activations can be represented as 8-bit integers (INT8) without a significant loss in accuracy. The principal idea of parameter quantization is to obtain a quantizer, i.e., a mapping function learned from the data, that converts a larger set of input values (e.g., FP32 numbers) into a smaller set of output values (e.g., the 256 levels of INT8). At the time of writing, both PyTorch (1.9.0) and TensorFlow (2.4.0) provide user-friendly model quantization functions.
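The mapping function described above is commonly implemented as affine (asymmetric) quantization, defined by a `scale` and a `zero_point`. Below is a minimal pure-Python sketch, assuming a single scale per tensor and an unsigned 8-bit target range [0, 255] (the range of PyTorch's `torch.quint8` dtype). The helper names `choose_qparams`, `quantize` and `dequantize` are hypothetical and are not a library API.

```python
# Hypothetical helper names; a sketch of per-tensor affine (asymmetric) INT8 quantization.
# Assumption: unsigned 8-bit target range [0, 255], as used by torch.quint8.

def choose_qparams(x_min, x_max, qmin=0, qmax=255):
    """Compute (scale, zero_point) so that [x_min, x_max] maps onto [qmin, qmax]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:  # all-zero input: any positive scale works
        scale = 1.0
    zero_point = qmin - round(x_min / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Real value -> integer code: q = clamp(round(x / scale) + zero_point)."""
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in values]


def dequantize(codes, scale, zero_point):
    """Integer code -> approximate real value: x' = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in codes]


weights = [-1.2, -0.5, 0.0, 0.31, 0.9, 2.4]
scale, zp = choose_qparams(min(weights), max(weights))
q = quantize(weights, scale, zp)
recovered = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, recovered))

print("scale:", scale, "zero_point:", zp)
print("INT8 codes:", q)
# For values inside [x_min, x_max] the rounding error is at most scale / 2.
print("max abs error:", max_err)
```

Because each INT8 code takes one byte instead of the four bytes of an FP32 value, the quantized weights need roughly a quarter of the storage, plus the small overhead of keeping `scale` and `zero_point`.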

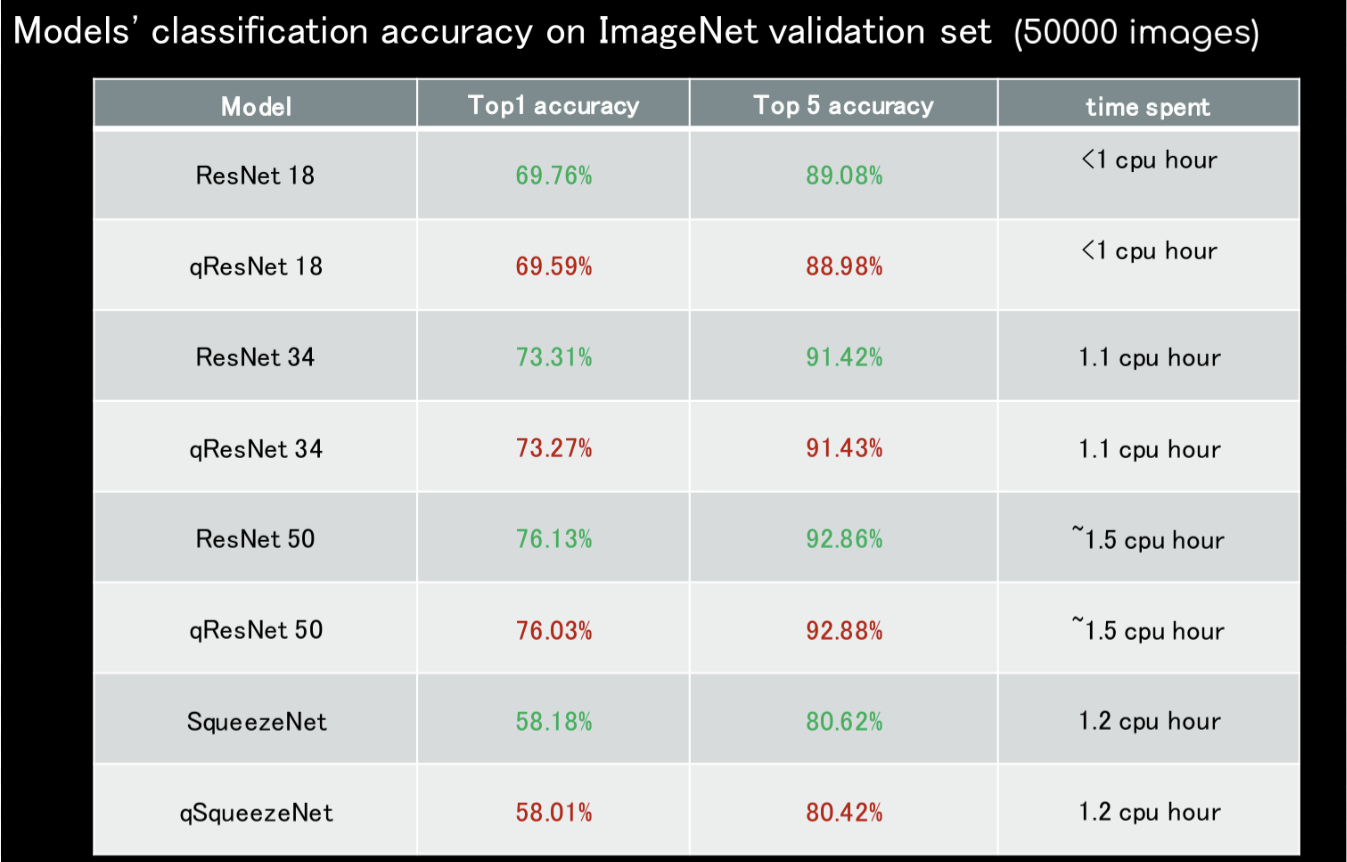

Since all of these quantization frameworks were still in beta, we built a model quantization library based on PyTorch (1.9.0), which gives us more flexibility in observer selection, model fusion and quantization hyperparameter search. Importantly, our quantized models perform better than those available from other resources. We conducted post-training quantization experiments on SqueezeNet and ResNet18/34/50, then checked the quantized models' (qModels') classification accuracy on the ImageNet validation set. The results are shown in the figure below. With INT8 parameters, we lost less than 0.25% accuracy. Super cool work, isn't it?
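Observer selection and hyperparameter search can be illustrated with a small, hypothetical sketch (pure Python, not the library described above). Here each candidate "observer" estimates the clipping range from calibration data as a two-sided percentile (`pct=100.0` is plain min/max), and the search keeps the candidate with the lowest quantize-dequantize mean squared error. PyTorch itself ships observers such as `MinMaxObserver` and `HistogramObserver`; this sketch does not use or reproduce them, and the candidate percentiles and simulated data are assumptions.

```python
# Hypothetical sketch: observer selection as a small hyperparameter search.
# Each candidate "observer" is a two-sided percentile clip of the calibration data;
# pct=100.0 is plain min/max. This is NOT PyTorch's MinMaxObserver/HistogramObserver.
import random


def percentile_range(samples, pct):
    """Estimate a clipping range [lo, hi] from calibration samples."""
    s = sorted(samples)
    n = len(s) - 1
    lo = s[round((100.0 - pct) / 100.0 * n)]
    hi = s[round(pct / 100.0 * n)]
    return lo, hi


def qparams(lo, hi, qmin=0, qmax=255):
    """Affine (scale, zero_point) for the range [lo, hi], widened to include 0."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = max(qmin, min(qmax, qmin - round(lo / scale)))
    return scale, zero_point


def fake_quant_mse(samples, scale, zero_point, qmin=0, qmax=255):
    """Mean squared error after a quantize -> dequantize round trip."""
    total = 0.0
    for x in samples:
        q = max(qmin, min(qmax, round(x / scale) + zero_point))
        total += (x - (q - zero_point) * scale) ** 2
    return total / len(samples)


def search_observer(samples, candidates=(100.0, 99.99, 99.9, 99.0)):
    """Return (pct, scale, zero_point, mse) with the lowest calibration MSE."""
    best = None
    for pct in candidates:
        scale, zp = qparams(*percentile_range(samples, pct))
        mse = fake_quant_mse(samples, scale, zp)
        print(f"pct={pct:>6}: scale={scale:.5f} mse={mse:.2e}")
        if best is None or mse < best[3]:
            best = (pct, scale, zp, mse)
    return best


random.seed(0)
# Simulated calibration activations: mostly N(0, 1) plus one large outlier.
acts = [random.gauss(0.0, 1.0) for _ in range(5000)] + [25.0]
best_pct, best_scale, best_zp, best_mse = search_observer(acts)
print("selected percentile:", best_pct)
```

Which candidate wins depends on the data: clipping shrinks `scale` (finer resolution for the bulk of the values) but adds a large error for every clipped outlier, so the search lets the calibration data decide instead of fixing one observer up front.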

SSD-ResNet50 Object Detection and Quantization

keywords: SSD, ResNet50, object detection, neural network quantization, PyTorch, COCO 2017

date: Sep.2021

SSD object detection

ResNet50 Quantization

keywords: ResNet50, neural network quantization, pytorch, ImageNet

date: Sep.2021

ResNet50